Human Bias On Trial, AI Wins

Where is the future of trust and fairness coming from? Many people would rather rely on an AI judge than a human one for a ‘fair ruling.’ Is that a sign of disillusionment, tech optimism, or both?

The Next Big Think! will send you one number, two insights, and three links weekly to keep you ahead of societal shifts.

#WomenNweed

57% of women ages 21-44 are using cannabis.

Does that surprise you? Women say they primarily use cannabis for therapeutic reasons, such as relieving anxiety (60%), helping to sleep (58%), and relieving pain (53%).

Pair that with our theSkimm State of Women report, in which 71% of Millennial women said it’s their job to be the Chief Worry Officer (CWO), explicitly and implicitly tasked with the mental load of planning for every contingency at work and at home, and it adds up.

Source: Harris MedMen featured in Forbes, Harris theSkimm: State of Women report

2 Insights

#1. Human Bias On Trial, AI Wins

What:

America's judicial system is so rife with predictable bias that nearly half of all Americans would trust an AI judge over a human one for a fair hearing. Why? Most question the impartiality of our judicial system and doubt whether Americans can get a fair hearing in a court of law. People are hopeful AI will help mitigate and counter some of these issues. However, as we discuss later, AI isn’t a neutral party; it’s still ‘people programming’ and codifying our belief systems into a machine that reflects our values back to us.

Big shout out to Danielle Summerlin, who conducted this research and helped write it up.

What the data tells us:

Americans overall distrust the courts and see a biased system of winners and losers.

Winners: About half or more of Americans say that courts give special treatment to the ultra-wealthy (55%), celebrities (54%), and political leaders (48%).

Losers: About half of Americans think the judicial system is biased against those with prior offenses (49%) and undocumented immigrants (45%).

Black and Hispanic Americans report experiencing the most mistreatment from the courts. Black Americans report a 25% higher rate of mistreatment than White Americans. *Note: the AAPI sample is under 100, so we present those numbers as directional only.

8 in 10 Americans (79%) think “Our judicial system needs to fundamentally change in order to provide unbiased justice to all.”

What’s the change people are looking for?

Representation of community + AI as a source of fairness

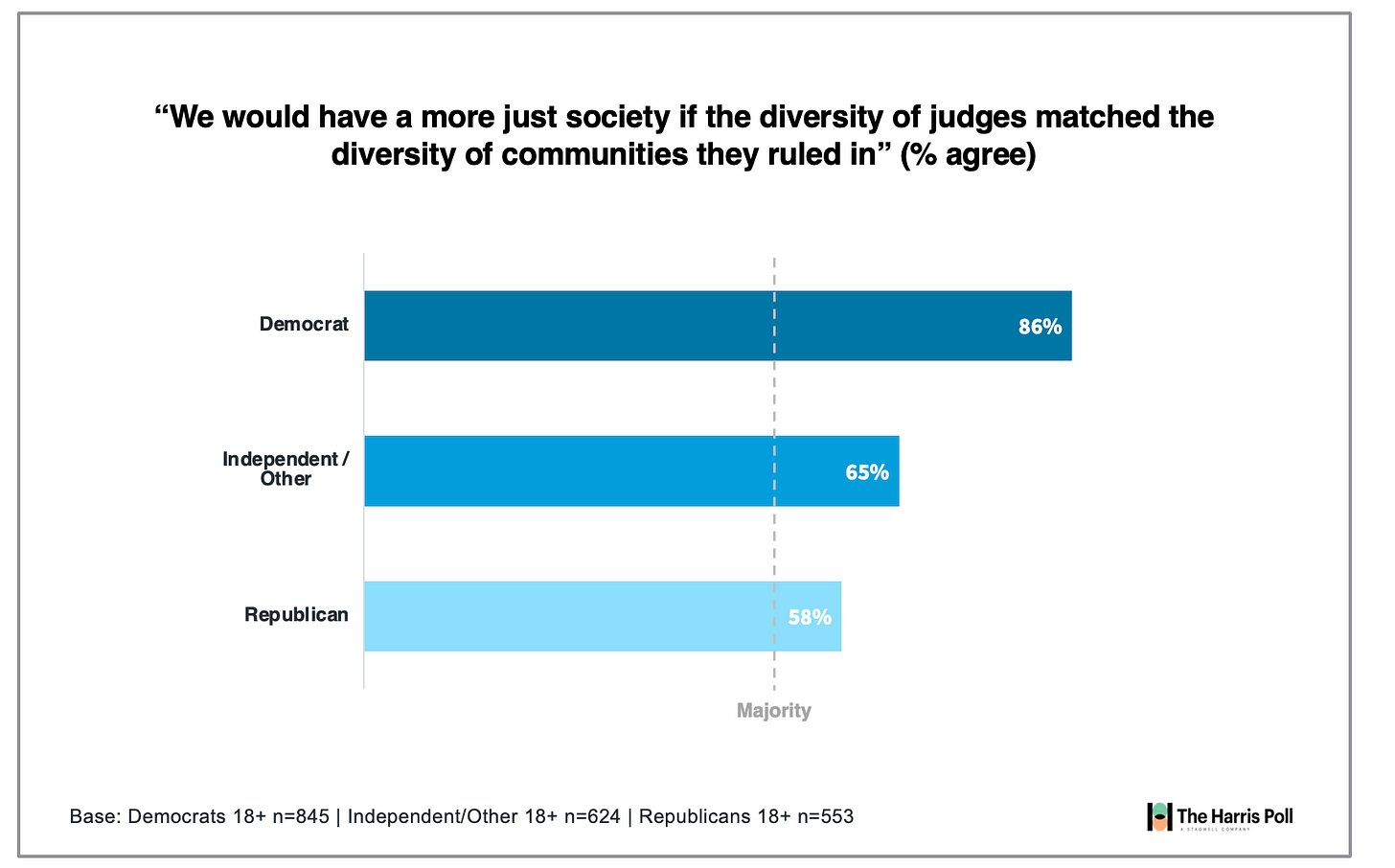

Most people across political affiliations believe that if judges better matched the diversity of the community they serve, we would have a more just society.

Mirroring the wide gaps in reported mistreatment, Black and Hispanic Americans are much more likely than White Americans to trust an AI judge over a human judge.

Majorities think an AI system would be more equitable; what does that say about our system? Is it that humans are biased, but machines are not, or are human judges so biased now that we would rather take our chances with a machine?

What to think about:

While the Supreme Court faced a falling out with the public over the overturning of Roe v. Wade last year, abortion access, transgender rights, and censorship issues are now playing out in state-level courts. The discontent directed at the Supreme Court could spread (or already has spread) to the system as a whole. While the possibility that AI could alleviate some issues fosters hope, more fundamental reform is needed to repair trust between the public and the judicial system.

#2. AI Adoption + Optimism Ramps Up, But At What Cost? #ISO: Socially Just Imaginaries

What:

Speaking of AI, we’ve been closely monitoring the adoption rate of ChatGPT. Below we highlight the increases in month-over-month adoption and dramatic shifts in optimism surrounding ChatGPT. Americans’ initial impressions are only half of the insight; the other half lies in the implications of what widespread AI could mean to society.

What the data tells us:

From January to March —> 🧐 Knowledge of ChatGPT increased by 11%

From January to March —> 🌎 People are more optimistic and less afraid of ChatGPT, especially people of color

From January to March —> 🤔 People believe ChatGPT will allow more space for critical thinking and less mundane tasks, especially employed Americans

What to think about:

Originally, we had many implications here; for example, what happens when everyone becomes a “baby engineer” (e.g., a prompt guru)?

However, after reading Race After Technology by Princeton professor Ruha Benjamin (thank you, Dory, for the recommendation), we thought it would be better to draw attention to the implications of this book.

Benjamin’s book argues that technology can’t save us if we don’t save ourselves first. Her point is that technology isn’t neutral; it’s designed, developed, and maintained by people, and people have biases. If we hold technology on a pedestal of neutrality, we ignore the fact that we may unintentionally reinforce the racial, gender, and socioeconomic inequities that already persist today. And worse, we might make those inequities more efficient by using AI to spot, sort, and code them faster.

Consider her point about the danger of being “coded.” In California, 42 babies were placed on the state’s gang affiliation watchlist.

Benjamin writes: So far, no one has ventured to explain how this could have happened except by saying that some combination of zip codes and racially coded names constitutes a risk. Once someone is added to the database, whether they know they are listed or not, they undergo even more surveillance and lose a number of rights. Most important, then, is the fact that once something or someone is coded, this can be hard to change.

Later in the book, which you should read for yourself, she explains how big data codifies the past and outlines the steps we need to take to move forward.

“If, as Cathy O’Neil writes, ‘Big Data processes codify the past. They do not invent the future. Doing that requires moral imagination, and that’s something only humans can provide,’ then what we need is greater investment in socially just imaginaries.

This would have to entail a socially conscious approach to tech development that would require prioritizing equity over efficiency and social good over market imperatives. Given the importance of training sets in machine learning, another set of interventions would require designing computer programs from scratch and training AI “like a child,” so as to make us aware of social bias.

The key is that all this takes time and intention, which runs against the rush to innovate that pervades the ethos of tech marketing campaigns. But, if we are not simply “users” but people committed to building a more just society, it is vital that we demand a slower and more socially conscious innovation.”

Consider her thinking alongside Americans’ willingness to trust AI judges, especially among Black and Hispanic Americans, whom the system has most mistreated.

As we mentioned in our previous post, tech leaders, academics, and scientists have already called for a pause on the “out-of-control race.”

Meanwhile, businesses must use this time to build road maps of ethical and responsible AI, advocating for transparency, equity, and ‘socially just imaginaries’ to build a better future for all.

3 Links

A message for male CEOs on return to office from a Wall Street women’s leader (CNBC): “Who’s missing from here? If we want to go back to the way it was, then acknowledge that you know it works mainly if you’re a man and have a wife at home,” says Ellevest CEO Sallie Krawcheck.

The Fortune Cookie Industry Is in Upheaval. ‘Expect Big Changes Ahead.’ (WSJ)

The age of average (Alex Murrell)

Curiosity is contagious; if you like this newsletter, please share it!!

Penned by Libby Rodney and Abbey Lunney, founders of the Thought Leadership Group at The Harris Poll. To learn more about the Thought Leadership Practice, just contact one of us or find out more here.